Machine learning is the practice of using algorithms and data analysis to let computers learn from data rather than follow explicitly programmed rules. Although it may sound futuristic, it is already part of daily life for many people, often without their knowledge. Virtual assistants such as Siri and Alexa use machine learning algorithms to set reminders, answer questions, and carry out user commands. As machine learning has become more widespread, the number of people pursuing it as a profession has grown as well.

Becoming a machine learning engineer typically involves gaining real-world project experience, enrolling in classes, and using free resources available on the internet. The success of a machine learning project also depends on choosing the right tools and technologies, a choice that should take several essential factors into account.

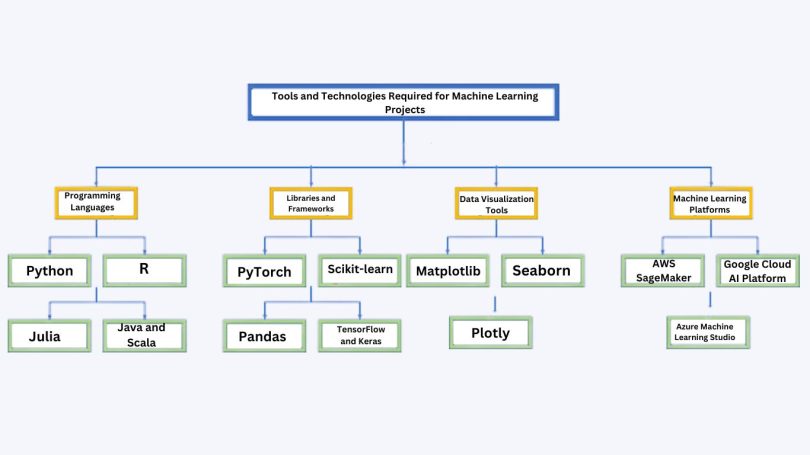

A wide range of tools and technologies is needed to implement machine learning projects successfully, from data collection and preprocessing to building, training, and deploying models. Which tools are appropriate depends on the scale, complexity, and purpose of the project. The fundamental tools and technologies for machine learning work are outlined below.

Programming Languages:

Python: Widely regarded as the first-choice language for machine learning because of its simplicity and its extensive library support (e.g., TensorFlow, PyTorch, Scikit-learn).

R: A language well suited to statistical computing and graphical analysis, used mostly in academic and research settings.

Julia: A rising choice for high-performance machine learning thanks to its speed and efficiency.

Java and Scala: Commonly used to deploy machine learning models into production environments and to scale within big data ecosystems.

Libraries and Frameworks:

TensorFlow and Keras: TensorFlow is an open-source library for numerical computation and machine learning, and Keras is a high-level neural network API commonly used with it. Together they support building and training models at scale.

PyTorch: An open-source machine learning library originally developed by Facebook (now Meta), valued for its flexibility and its dynamic computational graph.

Scikit-learn: A Python library offering simple and efficient tools for data mining and data analysis, built on NumPy, SciPy, and Matplotlib.

Pandas: A Python library providing high-performance, easy-to-use data structures and data analysis tools.

NumPy and SciPy: Essential packages for scientific computing in Python, with support for matrix operations, Fourier transforms, and random number generation.
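As a rough illustration of the three NumPy capabilities just mentioned, the sketch below multiplies a matrix by its inverse, finds the dominant frequency of a sine wave with a Fourier transform, and draws seeded random samples. The matrix values, the 5 Hz signal, the 100 Hz sample rate, and the seed are all arbitrary choices made for this example, and it assumes NumPy is installed.

```python
import numpy as np

# Matrix operations: multiplying a matrix by its inverse gives the identity.
a = np.array([[2.0, 1.0], [1.0, 3.0]])
identity = a @ np.linalg.inv(a)

# Fourier transform: a 5 Hz sine wave sampled at 100 Hz for one second.
sample_rate = 100
t = np.arange(sample_rate) / sample_rate
signal = np.sin(2 * np.pi * 5 * t)
spectrum = np.abs(np.fft.rfft(signal))
freqs = np.fft.rfftfreq(signal.size, d=1 / sample_rate)
dominant_freq = freqs[np.argmax(spectrum)]  # frequency with the largest magnitude

# Random number generation: a seeded generator makes the draw reproducible.
rng = np.random.default_rng(seed=0)
samples = rng.normal(loc=0.0, scale=1.0, size=1000)
```

Libraries such as Scikit-learn and Pandas build on the same array type, which is one reason NumPy tends to be learned first.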

Data Visualization Tools:

Matplotlib: A 2D plotting library for Python that produces figures in a variety of raster and vector formats and in interactive environments.

Seaborn: A Python visualization library built on Matplotlib that provides a high-level interface for drawing attractive statistical graphics.

Plotly: A graphing library for creating professional, interactive graphs online.

Integrated Development Environment (IDE) and Notebooks:

Jupyter Notebook: A web application for creating and sharing documents that combine code, equations, visualizations, and text.

Google Colab: A hosted Jupyter Notebook environment that requires no setup and provides free access to computing resources, including GPUs.

PyCharm, Visual Studio Code and Spyder: Popular IDEs (Integrated Development Environments) that offer advanced coding, debugging, and testing features for Python development. Choose the one that best suits your needs.

Big Data Technologies:

Apache Hadoop: A framework for the distributed processing of large datasets across clusters of computers using simple programming models.

Apache Spark: An open-source distributed computing engine that provides an interface for programming entire clusters with implicit data parallelism and fault tolerance.
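The data-parallel idea behind both tools can be sketched with nothing but the Python standard library. The example below is not the Hadoop or Spark API: it is a toy word count in which each partition is counted independently (the map step) and the partial results are merged (the reduce step). The function names and sample sentences are hypothetical, and a thread pool stands in for the worker machines of a real cluster.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce


def count_words(chunk: str) -> Counter:
    """Map step: count the words in one partition of the data."""
    return Counter(chunk.lower().split())


def merge_counts(left: Counter, right: Counter) -> Counter:
    """Reduce step: combine the partial counts from two partitions."""
    return left + right


def word_count(partitions: list[str], workers: int = 4) -> Counter:
    # Each partition is processed independently of the others, which is the
    # property that lets a real cluster spread the work across many machines.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_counts = list(pool.map(count_words, partitions))
    return reduce(merge_counts, partial_counts, Counter())


partitions = [
    "spark and hadoop process big data",
    "big data needs big clusters",
]
totals = word_count(partitions)
```

A real engine adds what this sketch leaves out, such as distributing partitions over the network and recomputing work lost when a node fails.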

Machine Learning Platforms:

AWS SageMaker, Google Cloud AI Platform and Azure Machine Learning Studio: Cloud-based platforms that provide the tools to build, train, and deploy machine learning models at scale, along with on-demand computing resources and managed services for data processing and model serving.

Model Deployment and Serving Tools:

Docker: A platform for building and running applications in containers, which separates applications from the underlying infrastructure.

Kubernetes: An open-source system that automates the deployment, scaling, and management of containerized applications.

TensorFlow Serving and TorchServe: Tools designed specifically for serving TensorFlow and PyTorch models, respectively, in production environments.
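To make "serving a model" concrete, here is a minimal sketch built only on the Python standard library. It is not the TensorFlow Serving or TorchServe API. The "model" is a hypothetical fixed linear function, and the `features` and `prediction` JSON fields, the handler names, and port 8000 are assumptions made purely for illustration.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical stand-in for a trained model: a fixed linear function.
WEIGHTS = [0.5, -1.0]
BIAS = 2.0


def predict(features: list[float]) -> float:
    return sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS


def handle_predict(body: bytes) -> tuple[int, bytes]:
    """Turn a JSON request body into an HTTP status code and a JSON response."""
    try:
        features = json.loads(body)["features"]
        if not isinstance(features, list) or len(features) != len(WEIGHTS):
            raise ValueError(f"expected a list of {len(WEIGHTS)} numbers")
        result = {"prediction": predict([float(x) for x in features])}
        return 200, json.dumps(result).encode()
    except (KeyError, TypeError, ValueError) as exc:
        # Malformed JSON, a missing field, or bad values all map to 400.
        return 400, json.dumps({"error": str(exc)}).encode()


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        status, payload = handle_predict(self.rfile.read(length))
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve(port: int = 8000) -> None:
    """Start the server; this call blocks until the process is interrupted."""
    HTTPServer(("localhost", port), PredictHandler).serve_forever()
```

Calling `serve()` starts a local endpoint that accepts a POST body such as `{"features": [2, 1]}`. Dedicated serving tools handle concerns this sketch ignores, including model versioning, request batching, and hardware acceleration.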

Version Control and Collaboration:

Git: A free and open-source distributed version control system designed to handle projects of any size efficiently.

GitHub, GitLab, Bitbucket: Platforms that host Git repositories and support collaborative software development.

Data Storage and Management:

Effective data storage and management are critical to the success and efficiency of machine learning projects. The following categories cover the tools and technologies commonly used for this purpose:

SQL Databases: Relational database management systems (e.g., MySQL, PostgreSQL) that use SQL to store, query, and modify data in structured tables.

NoSQL Databases: Database management systems (e.g., MongoDB, Cassandra) designed to accommodate data formats beyond the tables of relational databases. They offer flexibility in storing and retrieving data, particularly unstructured and semi-structured data.
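The difference between the two storage styles can be sketched in a few lines. The example below uses SQLite, which ships with Python, as a stand-in for a relational system such as MySQL or PostgreSQL, and plain JSON documents as a stand-in for a document store such as MongoDB. The `samples` table, its columns, and the sample records are all hypothetical.

```python
import json
import sqlite3

# Relational side: a fixed schema, queried with SQL.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE samples (id INTEGER PRIMARY KEY, label TEXT, score REAL)"
)
conn.executemany(
    "INSERT INTO samples (label, score) VALUES (?, ?)",
    [("cat", 0.9), ("dog", 0.7), ("cat", 0.6)],
)
cat_rows = conn.execute(
    "SELECT label, score FROM samples WHERE label = ? ORDER BY score DESC",
    ("cat",),
).fetchall()
conn.close()

# Document side: records are free-form and need not share the same fields.
documents = [
    {"label": "cat", "score": 0.9, "tags": ["indoor"]},
    {"label": "dog", "source": {"camera": "A7", "fps": 30}},
]
serialized = [json.dumps(doc) for doc in documents]
with_source = [doc for doc in map(json.loads, serialized) if "source" in doc]
```

The relational table rejects rows that do not fit its columns, while the documents can each carry different fields, which is the flexibility that suits semi-structured data.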

Choosing the right tools and technologies matters greatly in machine learning projects. The choice calls for a careful look at several factors, including the size of the data involved, the computational requirements, and the deployment environment. By aligning the chosen tools with the project’s specific needs, practitioners can optimize performance and keep project execution smooth.

Conclusion:

Machine learning projects are reshaping many industries, yet navigating the range of available tools and technologies can be overwhelming. This blog described tools and technologies across several categories: programming languages, libraries and frameworks, data visualization tools, IDEs and notebooks, big data technologies, machine learning platforms, model deployment tools, version control and collaboration tools, and data storage and management. Picking the best tools for a project comes down to weighing factors such as data size, computational needs, and deployment environment. This awareness helps experienced professionals and newcomers alike. By mastering these tools and making use of the resources available on the internet, individuals can take their first steps towards a machine learning career. The future of machine learning is bright, and this guide is intended to help readers understand and engage with the ongoing developments in the field.